Understanding AI Prompt Engineering – A Deep Dive into System and User Prompts in 2025

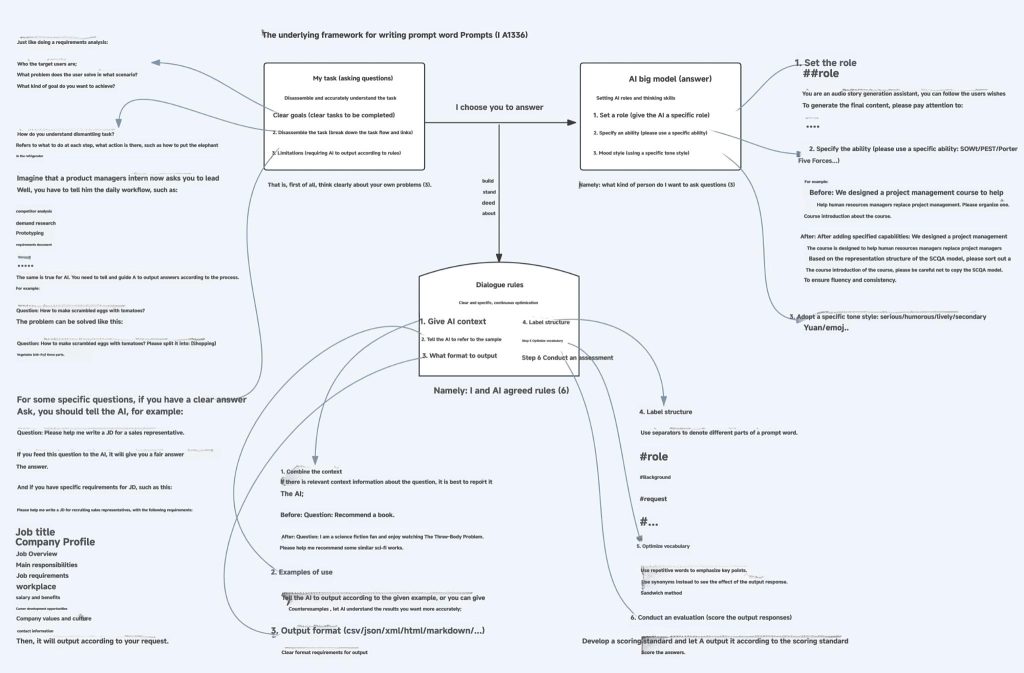

Prompt engineering is the cornerstone of interacting with large language models (LLMs). Whether you're using tools like DeepSeek, Coze, or ChatGPT, understanding how to craft precise prompts transforms vague outputs into targeted solutions. This guide breaks down the underlying framework of AI prompts, empowering you to harness LLMs effectively for any task.

Why Prompts Matter: Your Only Interface with AI

LLMs like those powering modern AI tools operate as probabilistic systems. Once trained, their knowledge remains fixed, so at inference time the main lever you control is your input. Think of prompts as translators between human intent and machine understanding:

- Vague prompts = Inconsistent, unreliable outputs

- Structured prompts = Precise, actionable results

Without clear instructions, even advanced models "guess" your needs, often missing the mark—especially in specialized domains like productivity tools or technical workflows.

Core Components: System vs. User Prompts

🔧 System Prompt: The Invisible Director

- Role: Sets global rules, tone, and constraints (e.g., *"You are a cybersecurity expert analyzing threats"*).

- Application: Pre-configured in apps like AI writing assistants, but customizable in platforms like Coze when building agents.

- Example:

# Role

You are a nutritionist. Prioritize evidence-based advice and cite sources.

# Constraints

- Never recommend unverified supplements

- Use layman-friendly terms

💬 User Prompt: Your Specific Request

- Role: The task or question per interaction (e.g., *"Create a meal plan for diabetics"*).

- Includes: Text queries, uploaded documents, or images.

💡 Key Insight: LLMs combine System and User prompts to generate responses. Optimizing both unlocks peak performance.
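As a rough illustration of how the two prompts are combined, many chat-style interfaces accept a list of role-tagged messages. The sketch below assumes that convention; the helper `build_messages` and the `role`/`content` field names are illustrative assumptions, and the exact format depends on the platform you use.

```python
def build_messages(system_prompt: str, user_prompt: str) -> list[dict[str, str]]:
    """Pair a system prompt with a user prompt in role-tagged message form."""
    return [
        {"role": "system", "content": system_prompt.strip()},
        {"role": "user", "content": user_prompt.strip()},
    ]


# System prompt taken from the nutritionist example in this guide.
SYSTEM_PROMPT = """
# Role
You are a nutritionist. Prioritize evidence-based advice and cite sources.

# Constraints
- Never recommend unverified supplements
- Use layman-friendly terms
"""

messages = build_messages(SYSTEM_PROMPT, "Create a meal plan for diabetics")

# Print each message so the split between global rules and the request is visible.
for message in messages:
    print(f"[{message['role']}] {message['content']}")
```

The system message stays the same across a conversation, while a new user message is added for each request.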

The Prompt Design Framework: 3 Pillars of Precision

1️⃣ Define Your Task

- Clarify Goals:

- Who is the audience?

- What problem does this solve?

- What outcome is ideal?

- Break Down Steps:

Weak: "How do I start a podcast?"

Strong: "Outline a 5-step workflow for launching a tech podcast: [Topic Research, Equipment Setup, Recording, Editing, Distribution]."

- Set Constraints:

Specify format (Markdown/JSON), length, or structure: *"Generate a sales JD in this template: [Job Title, Responsibilities, Qualifications, Salary Range]"*
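One benefit of a fixed template is that the reply can be checked mechanically. The sketch below assumes you ask for JSON (one of the two formats mentioned above) and then verify that every template field is present. The helper names and the sample reply are made up for illustration.

```python
import json

# Template fields from the sales JD example.
JD_FIELDS = ["Job Title", "Responsibilities", "Qualifications", "Salary Range"]


def build_jd_prompt(fields: list[str]) -> str:
    """Build a prompt that pins the output to a fixed set of JSON keys."""
    field_list = ", ".join(fields)
    return (
        "Generate a sales JD as a JSON object with exactly these keys: "
        f"[{field_list}]. Return only the JSON."
    )


def missing_fields(raw_output: str, fields: list[str]) -> list[str]:
    """Return the template fields that are absent from the model's JSON reply."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return list(fields)  # unparseable output satisfies no field
    if not isinstance(data, dict):
        return list(fields)
    return [field for field in fields if field not in data]


prompt = build_jd_prompt(JD_FIELDS)
# Hypothetical model reply, hard-coded here instead of calling a real model.
sample_output = '{"Job Title": "Account Executive", "Responsibilities": "Close deals"}'

print(prompt)
print(missing_fields(sample_output, JD_FIELDS))  # ['Qualifications', 'Salary Range']
```

If the list of missing fields is not empty, that is a signal to tighten the constraint wording or retry the request.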

2️⃣ Assign the AI’s Role

Guide the model to "wear the right hat" for your task:

- Role Specification: *"Act as a financial analyst specializing in startups"*

- Skill Activation: *"Use SWOT analysis to evaluate this business model"*

- Tone Control: *"Explain like I’m 15, using analogies and emojis"*

⚠️ Without role assignment, models default to generic responses—like asking a random person instead of a specialist.

3️⃣ Set Interaction Rules

- Context Injection: *"As a UX designer (background), suggest improvements for this app"*

- Examples & Counterexamples: Show desired/undesired outputs.

- Output Formatting: *"Return data as a table with columns: Tool, Use Case, Pricing"*

- Delimiters: Use # tags to segment prompts:

# Task

Summarize key points from the report.

# Output Rules

- 3 bullet points max

- Include statistics
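If you assemble prompts in code, the # delimiters can be generated from a simple mapping of section names to content. This is a minimal sketch; `render_prompt` is a hypothetical helper, not part of any library.

```python
from typing import Union

SectionBody = Union[str, list[str]]


def render_prompt(sections: dict[str, SectionBody]) -> str:
    """Render sections as '# Heading' blocks; list bodies become bullet points."""
    blocks = []
    for heading, body in sections.items():
        if isinstance(body, list):
            body = "\n".join(f"- {item}" for item in body)
        blocks.append(f"# {heading}\n{body}")
    # A blank line between blocks keeps each section visually separate.
    return "\n\n".join(blocks)


prompt = render_prompt(
    {
        "Task": "Summarize key points from the report.",
        "Output Rules": ["3 bullet points max", "Include statistics"],
    }
)
print(prompt)
```

Keeping sections in a mapping makes it easy to add, remove, or reorder rules without retyping the whole prompt.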

Real-World Applications

Case 1: Educational Story Generator

# Role

Children’s science author using anthropomorphic characters.

# Task

Create an 800-word story about the water cycle, structured as:

1. [Evaporation → Cloud Formation → Rainfall]

2. Characters: Rabbit protagonists

3. Style: Rhyming, playful

# Output

Markdown with sections: [Title, Story, Interactive Q&A]

Case 2: Medical Diagnosis Agent

# Role

Telemedicine doctor analyzing symptoms.

# Skills

- Identify chief complaints → Trigger symptom-checklist workflow

- Output diagnostic suggestions + recommended tests

# Constraints

Reject non-medical queries. Cite peer-reviewed sources.

Pro Optimization Tactics

- The Sandwich Method: Critical instructions at the start and end (e.g., bookend role reminders).

- Synonym Swaps: Replace ambiguous terms (e.g., "efficient" → "under 5-minute response time").

- Self-Evaluation: *"Rate this output’s clarity (1-5) against [criteria] before finalizing."*
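The Sandwich Method and the Self-Evaluation step can both be applied as small string transformations on an existing prompt. The sketch below is one possible way to do it. The function names and the "Reminder:" wording are assumptions chosen for illustration.

```python
def sandwich(critical: str, body: str) -> str:
    """Place the critical instruction both before and after the main prompt body."""
    return f"{critical}\n\n{body}\n\nReminder: {critical}"


def with_self_evaluation(prompt: str, criteria: list[str]) -> str:
    """Append a request to rate the draft against the given criteria before finalizing."""
    joined = ", ".join(criteria)
    return (
        f"{prompt}\n\n"
        f"Before finalizing, rate this output's clarity (1-5) against: {joined}."
    )


critical = "You are a telemedicine doctor. Reject non-medical queries."
prompt = sandwich(critical, "Patient reports a persistent cough for two weeks.")
final_prompt = with_self_evaluation(prompt, ["accuracy", "plain language"])
print(final_prompt)
```

Repeating the critical instruction at both ends is the point of the pattern. Whether it helps for a given model is worth testing rather than assuming.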

Key Takeaways

- System Prompts set boundaries; User Prompts drive actions.

- Precision requires task decomposition, role assignment, and rule definition.

- Iterate prompts like code—test, refine, and leverage patterns.
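Treating prompts like code implies having checks you can rerun after each revision. As a small sketch, the function below tests a reply against the two output rules used earlier in this guide (3 bullet points max, include statistics). The digit check is a crude stand-in for "contains statistics", and both sample replies are invented.

```python
import re


def check_summary(output: str, max_bullets: int = 3) -> list[str]:
    """Return a list of rule violations; an empty list means the output passes."""
    problems = []
    bullets = [line for line in output.splitlines() if line.lstrip().startswith("- ")]
    if len(bullets) > max_bullets:
        problems.append(f"too many bullet points: {len(bullets)} > {max_bullets}")
    # Rough proxy: treat any digit in the output as evidence of a statistic.
    if not re.search(r"\d", output):
        problems.append("no statistics found (no digits in output)")
    return problems


# Hypothetical model replies used as test fixtures.
good = "- Revenue grew 12% year over year\n- Churn fell to 3%"
bad = "- Revenue grew\n- Churn fell\n- Costs rose\n- Hiring slowed"

print(check_summary(good))  # []
print(check_summary(bad))
```

Running the same checks after every prompt change shows whether a refinement actually improved compliance with the rules.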

Mastering this framework turns generative AI from a novelty into a productivity powerhouse.
