Gemini 2.5 Pro 0605 Update: A Refined Leap in General AI Reasoning

As competition among large language models (LLMs) intensifies, Google continues to push Gemini 2.5 Pro forward. On June 5, the latest version (0605) launched quietly and without the usual version suffix, which may mark it as the final iteration of the Gemini 2.5 line. It might look like another incremental release, but this time the improvements are notably strategic and significant.

From Coding Boosts to Balanced Intelligence

In May, the Gemini 2.5 Pro 0506 update focused heavily on coding performance. The move resonated with developers but inadvertently weakened the model's general-purpose reasoning: many users noted performance regressions on certain benchmarks compared with the March 0325 version.

Google took this feedback seriously.

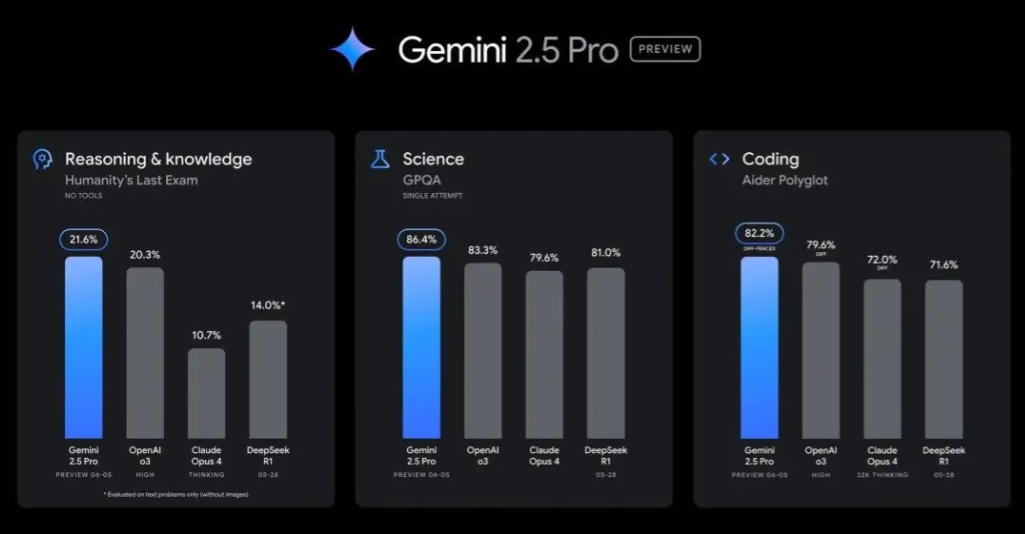

With the 0605 release, Gemini pivots clearly back toward broader intelligence. The model shows top-tier performance on demanding benchmarks such as Aider Polyglot (code editing across several programming languages), GPQA (graduate-level science questions), and Humanity's Last Exam (HLE, expert-level questions spanning many fields). Together, these measure not only code generation but also mathematical reasoning, scientific understanding, and breadth of knowledge.

📊 Its LMArena Elo score jumped 24 points to 1470, and its WebDevArena Elo score rose 35 points to 1443, reinforcing Gemini's regained balance across disciplines.

These gains place Gemini firmly among the elite generalist AI models, rivaling, and on some tasks surpassing, Claude 3 Opus, GPT-4, and Grok.

Creativity and Format Matter Again

Beyond raw benchmark numbers, Google has emphasized improvements in response formatting and creativity. The update strengthens Gemini's ability to produce structured, well-styled output, a crucial skill for content creators, marketers, and educators who rely on LLMs for human-readable, publication-ready results.

For example, internal tests and independent evaluations indicate that Gemini now handles aesthetically demanding prompts far better than before. One notable test used a color-extractor prompt designed to evaluate both functionality and design sensibility. Gemini 0605 met every requirement on the first attempt, something other top-tier models consistently failed to do.

This evolution isn't just about being smarter; it's about being more useful.

Pricing and Access: Still Generous, For Now

Another reason Gemini 2.5 Pro deserves attention is its strong performance-to-cost ratio. At the time of writing, the model is still freely available in Google AI Studio, though that generosity may not last.

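Here's a quick comparison of input and output token costs. The Gemini row is relative to OpenAI's o3; the Claude 4 Opus and Grok 3 rows are relative to Gemini 2.5 Pro: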

| Model | Input Token Cost | Output Token Cost |
|---|---|---|
| Gemini 2.5 Pro | ~1/8 of o3 | ~1/4 of o3 |
| Claude 4 Opus | ~10× more | ~7.5× more |
| Grok 3 | ~2× more | ~1.5× more |

When budget matters, as it often does for indie developers, students, and solo creators, Gemini offers a competitive edge in both affordability and versatility.

Is This the Final 2.5 Version?

The removal of the version suffix hints that this could be the last official update in the Gemini 2.5 Pro line before a larger transition. Whether that next step is Gemini 3.0 or a specialized variant remains to be seen.

What is clear is that Google is listening more closely to feedback and closing the gaps between coding prowess, general reasoning, and user-centric output, the triad that defines modern LLM utility.

A Model Worth Exploring

The Gemini 0605 update is more than just another patch. It reflects a refined approach to model tuning, where performance, usability, and creativity converge. For tech-savvy users, content creators, and AI enthusiasts, it's a good time to experiment with what Gemini can now offer.

🧪 Want to test it for yourself? Head to Google AI Studio while it’s still free.
