DeepSeek-R1 0528: The $0.1 Challenger Outperforming 30 AI Models in Frontend Development

The Unthinkable Just Happened

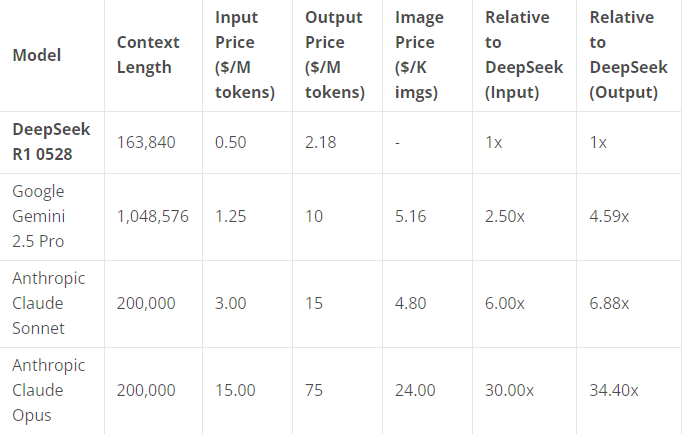

When DeepSeek-R1 0528 launched on May 28, 2025, few expected an open-source model to compete with industry giants like Claude Opus or Gemini Pro. Yet the benchmark data points to a seismic shift:

- 🥇 LiveCodeBench: Matches OpenAI’s top-tier models

- 🌍 Aider Multilingual: Comparable to Claude Opus

- 💻 Frontend Prowess: Roughly 90% of Opus’ capability at 1/150 the listed price ($0.1 vs. $15 per million tokens)

I rigorously tested R1 against Claude Opus/Sonnet and Gemini 2.5 Pro across six progressively complex frontend challenges. Here’s what burned through my API credits (and sanity).

Test Methodology

- Models Compared:

  - Claude Opus 4 ($15/million tokens)

  - Claude Sonnet 4 ($3/million tokens)

  - Gemini 2.5 Pro ($7/million tokens)

  - DeepSeek-R1 0528 ($0.1/million tokens)

- Tasks: Real-world frontend implementations (HTML/CSS/JS)

- Scoring: Code functionality, UI polish, responsiveness, and prompt adherence

The Trials: Brutal and Revealing

1️⃣ Warehouse Management System

Complexity: CRUD operations, inventory tracking, dashboard analytics

- DeepSeek-R1: ✅ Full functionality. Clean SaaS-style sidebar, live data previews, and localStorage persistence.

- Opus 4: ❌ Critical JS errors broke the UI.

- Sonnet 4: ❌ Incomplete forms; buttons unresponsive.

- Gemini 2.5: ⚠️ Ran inconsistently with UX flaws.

R1 delivered production-ready code others couldn’t.
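For readers unfamiliar with the pattern, here is a minimal sketch of CRUD with localStorage-style persistence. It is not output from any of the tested models. The names `InventoryStore`, `MemoryStore`, and the `warehouse.inventory` key are hypothetical, and the storage interface is an assumption modeled on the `getItem`/`setItem` methods of the browser's `localStorage`.

```typescript
// Minimal storage contract; the browser's window.localStorage satisfies it.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

interface InventoryItem {
  sku: string;
  name: string;
  quantity: number;
}

// Hypothetical key name, chosen for this sketch only.
const STORAGE_KEY = "warehouse.inventory";

class InventoryStore {
  private readonly store: KeyValueStore;

  constructor(store: KeyValueStore) {
    this.store = store;
  }

  // Read: parse the saved JSON array, or start empty when nothing is stored.
  list(): InventoryItem[] {
    const raw = this.store.getItem(STORAGE_KEY);
    return raw === null ? [] : (JSON.parse(raw) as InventoryItem[]);
  }

  // Create or update: replace any item with the same SKU, then persist.
  upsert(item: InventoryItem): void {
    const others = this.list().filter((existing) => existing.sku !== item.sku);
    this.save(others.concat([item]));
  }

  // Delete: drop the item with the given SKU, then persist.
  remove(sku: string): void {
    this.save(this.list().filter((existing) => existing.sku !== sku));
  }

  // Dashboard metric: total units across all items.
  totalUnits(): number {
    return this.list().reduce((sum, item) => sum + item.quantity, 0);
  }

  private save(items: InventoryItem[]): void {
    this.store.setItem(STORAGE_KEY, JSON.stringify(items));
  }
}

// In-memory stand-in so the sketch also runs outside a browser.
class MemoryStore implements KeyValueStore {
  private readonly data: Record<string, string> = {};

  getItem(key: string): string | null {
    const value = this.data[key];
    return value === undefined ? null : value;
  }

  setItem(key: string, value: string): void {
    this.data[key] = value;
  }
}
```

In a browser, passing `window.localStorage` instead of `MemoryStore` should keep the inventory across page reloads.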

2️⃣ Particle Grid Animation Editor (P5.js)

Complexity: 10x viewport grid, real-time physics, dark mode

- DeepSeek-R1: ✅ Flawless. Custom shapes, smooth animations, and optimized rendering.

- Opus 4: ❌ Grid rendered—but never animated.

- Sonnet 4: ❌ Failed to generate grid.

- Gemini 2.5: ❌ JS console flooded with errors.

Only R1 handled performance-critical logic correctly.
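To make "real-time physics" on a grid concrete, here is a rough sketch of one common approach: each particle is pulled back toward its grid cell by a spring and slowed by damping. This is an illustration, not the code any model produced. The function names and the `stiffness` and `damping` defaults are assumptions, and drawing (for example inside a P5.js draw loop) is left out.

```typescript
interface Particle {
  x: number;
  y: number;
  vx: number;
  vy: number;
  homeX: number; // grid cell the particle springs back to
  homeY: number;
}

// Lay particles out on a regular grid, at rest in their home cells.
function makeParticleGrid(cols: number, rows: number, spacing: number): Particle[] {
  const particles: Particle[] = [];
  for (let r = 0; r < rows; r++) {
    for (let c = 0; c < cols; c++) {
      const x = c * spacing;
      const y = r * spacing;
      particles.push({ x: x, y: y, vx: 0, vy: 0, homeX: x, homeY: y });
    }
  }
  return particles;
}

// One physics step: the spring accelerates each particle toward its home
// cell, damping bleeds off velocity, then the position is advanced.
function stepParticles(particles: Particle[], stiffness = 0.1, damping = 0.8): void {
  for (const p of particles) {
    p.vx = (p.vx + (p.homeX - p.x) * stiffness) * damping;
    p.vy = (p.vy + (p.homeY - p.y) * stiffness) * damping;
    p.x += p.vx;
    p.y += p.vy;
  }
}
```

Calling `stepParticles` once per animation frame and then drawing each particle at `(x, y)` gives the settle-back motion; a mouse interaction would simply nudge `vx` and `vy`.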

3️⃣ Gradient Palette Generator

Complexity: Image color extraction, Apple/Framer Motion-inspired UI

- DeepSeek-R1: 🎨✅ Stunning visuals (SEO text, polished cards)—but color extraction logic missing.

- Opus/Sonnet: ⚠️ Functional but visually crude.

- Gemini 2.5: ❌ Fully broken.

R1’s UI excellence highlighted its design fluency.
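The missing piece, color extraction, can be sketched in a few lines. The version below is one simple approach (a quantized color histogram), not necessarily what the prompt or any model intended. It assumes the pixels arrive as RGBA bytes, the layout a canvas 2D context returns from `getImageData(...).data`. The function names and the bucket width of 32 are illustrative choices.

```typescript
const BUCKET = 32; // assumed bucket width: 8 levels per color channel

// Snap a 0-255 channel value to the center of its bucket.
function quantize(value: number): number {
  return Math.floor(value / BUCKET) * BUCKET + BUCKET / 2;
}

function toHex(r: number, g: number, b: number): string {
  return "#" + [r, g, b].map((c) => ("0" + c.toString(16)).slice(-2)).join("");
}

// Return the `count` most frequent quantized colors as hex strings.
function dominantColors(pixels: Uint8ClampedArray, count: number): string[] {
  const counts: Record<string, number> = {};
  for (let i = 0; i + 3 < pixels.length; i += 4) {
    if (pixels[i + 3] < 128) continue; // skip mostly transparent pixels
    const hex = toHex(quantize(pixels[i]), quantize(pixels[i + 1]), quantize(pixels[i + 2]));
    counts[hex] = (counts[hex] || 0) + 1;
  }
  return Object.keys(counts)
    .sort((a, b) => counts[b] - counts[a])
    .slice(0, count);
}

// Turn the extracted palette into a CSS gradient value.
function toGradient(colors: string[]): string {
  return "linear-gradient(90deg, " + colors.join(", ") + ")";
}
```

Because colors are snapped to bucket centers, the palette is approximate; a smaller `BUCKET` keeps more nuance at the cost of a noisier histogram.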

4️⃣ Meditation Dashboard w/ Spotify

Complexity: Dynamic quotes, responsive player, anime.js transitions

- Opus 4: ✅ Perfect typography and Spotify integration.

- Gemini 2.5: ✅ Strong visuals with image crossfades.

- DeepSeek-R1: ⚠️ Minor alignment issues.

- Sonnet 4: Trailed the other three.

Opus won aesthetics—but R1 remained competitive.

5️⃣ Sleep Tracking Mobile App (4 Pages)

Complexity: Multi-page SPA, data visualization, anime.js

- Opus 4: ✅ Generated all pages but with desktop-biased UI.

- DeepSeek-R1: ✅ Single page, but best-in-class mobile UX.

- Sonnet 4: ⚠️ Partial output with errors.

- Gemini 2.5: ❌ Non-functional pages.

R1’s single page outshone Opus’ complete but desktop-biased set in mobile UX, though R1 delivered only one of the four pages.

6️⃣ Advanced Tetris w/ Special Blocks

Complexity: Hold queue, ghost pieces, 3 themes, particle FX

- Opus/Sonnet: ✅ Gameplay solid—but ignored themes.

- DeepSeek-R1: ✅ Themed UI and core mechanics—omitted special blocks.

- Gemini 2.5: ❌ Unplayable.

Claude nailed gameplay; R1 dominated aesthetics.
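One of the requested mechanics, the ghost piece, shows where a falling piece would land. A minimal sketch of the usual approach follows, assuming the board is a grid of booleans (`true` = occupied) and a piece is a list of cell offsets. The names `fits` and `ghostRow` are hypothetical and not taken from any model's output.

```typescript
type Cell = { row: number; col: number };

// True when every cell of the piece, shifted to (row, col),
// lies inside the board and lands on an empty square.
function fits(board: boolean[][], shape: Cell[], row: number, col: number): boolean {
  return shape.every((cell) => {
    const r = row + cell.row;
    const c = col + cell.col;
    return r >= 0 && r < board.length && c >= 0 && c < board[r].length && !board[r][c];
  });
}

// Ghost piece: slide the piece down from its current row until the
// next step would collide. Assumes the piece fits at its current position.
function ghostRow(board: boolean[][], shape: Cell[], row: number, col: number): number {
  let ghost = row;
  while (fits(board, shape, ghost + 1, col)) {
    ghost++;
  }
  return ghost;
}
```

The same `fits` check can back a hard drop (move the piece to `ghostRow`) and ordinary collision testing for moves and rotations.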

The Bottom Line: A Price/Performance Earthquake

| Model | Avg. Score (out of 5) | Cost (per million tokens) |
|---|---|---|
| DeepSeek-R1 0528 | ✅✅✅✅⚪ | $0.1 |
| Claude Opus 4 | ✅✅✅✅⚪ | $15 |
| Claude Sonnet 4 | ✅✅✅⚪⚪ | $3 |
| Gemini 2.5 Pro | ✅✅⚪⚪⚪ | $7 |

Why this matters:

- 💸 At the prices listed above, R1 costs 150x less than Opus ($0.1 vs. $15 per million tokens) yet delivers roughly 90% of its capability in these frontend tasks.

- 🚀 Its 128K context handles complex, multi-file implementations.

- 🔓 Open-source access democratizes high-end coding assistance.
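The price gap is plain arithmetic on the table's figures. Here is a small sketch for reproducing it, assuming one blended price per million tokens as listed in this article (real API pricing typically differs for input and output tokens). The function names are hypothetical.

```typescript
// Prices per million tokens, copied from the table in this article.
const PRICE_PER_MILLION_TOKENS: Record<string, number> = {
  "DeepSeek-R1 0528": 0.1,
  "Claude Opus 4": 15,
  "Claude Sonnet 4": 3,
  "Gemini 2.5 Pro": 7,
};

function priceOf(model: string): number {
  const price = PRICE_PER_MILLION_TOKENS[model];
  if (price === undefined) {
    throw new Error("Unknown model: " + model);
  }
  return price;
}

// How many times more expensive `model` is than `baseline`.
function costRatio(model: string, baseline: string): number {
  return priceOf(model) / priceOf(baseline);
}

// Dollar cost of a run that consumes `tokens` tokens.
function costForTokens(model: string, tokens: number): number {
  return (tokens / 1e6) * priceOf(model);
}
```

With these inputs, `costRatio("Claude Opus 4", "DeepSeek-R1 0528")` comes out at about 150, and a 2-million-token test run costs about $30 on Opus versus about $0.20 on R1.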

“What R1 achieves at $0.1 is nothing short of alchemy. When its successor arrives, the playing field may shatter.”

Final Thoughts

DeepSeek-R1 isn’t just “good for an open-source model”—it’s a legitimate Opus-tier alternative for web development. While it trails slightly in niche areas (e.g., game logic), its cost-adjusted value is unprecedented.

For global developers, R1 represents more than savings: it’s proof that elite AI tools need no longer be gated by price. The revolution won’t be siloed—it’ll be open-sourced.

👉 Explore R1-0528: DeepSeek Official Release

A Balanced Perspective

It’s important to emphasize that this benchmark focuses solely on frontend development tasks—a narrow slice of the AI landscape. Every model has unique strengths: Claude Opus excels in creative writing, Gemini thrives in multimodal reasoning, and Sonnet offers a balanced cost/performance ratio. The goal isn’t to crown a "best" model, but to highlight how open-source alternatives like DeepSeek-R1 are closing the gap in specialized domains. Always choose tools aligned with your specific needs, budget, and workflow.